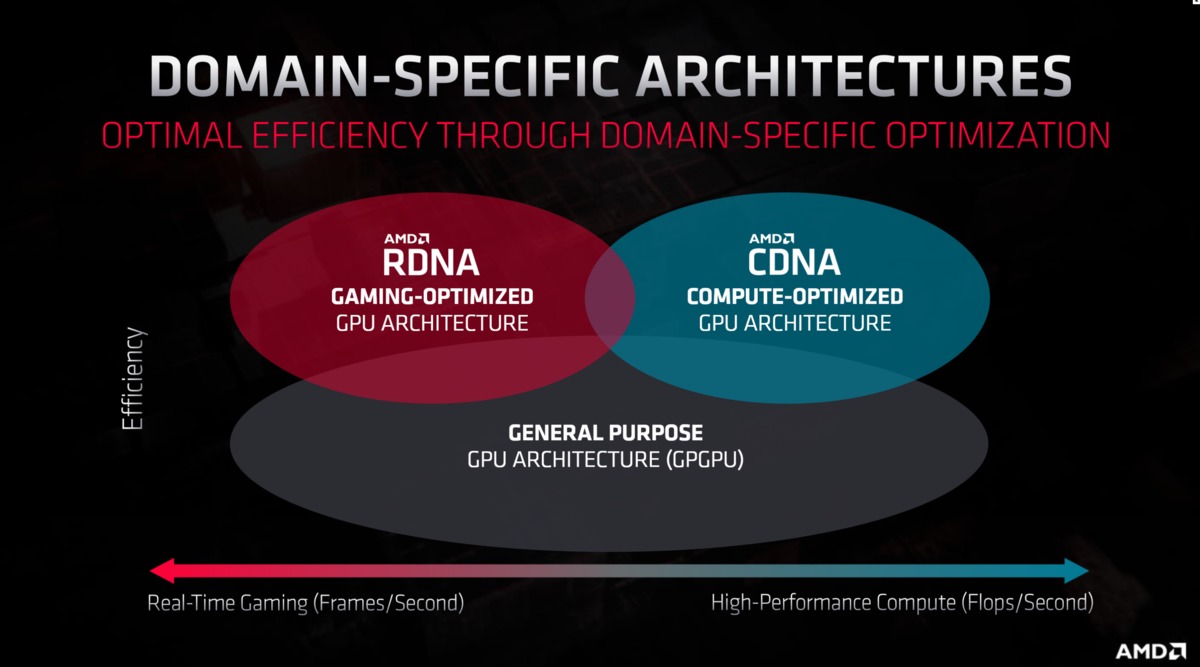

AMD gave us a glimpse of its future this week, divulging new information about its Radeon and Ryzen roadmaps during the company’s Financial Analyst Day. Lots of new information was disseminated, but as PCWorld’s resident GPU expert, the part that jumped out at me was the introduction of “CDNA,” a new, compute-specific graphics architecture built for data centers. No, not because I’ve suddenly become interested in crunching numbers, but because sharing a single architecture across consumer and workstation products gave Radeon graphics cards both notable benefits and notable drawbacks over the years.

What will CDNA’s debut mean for the “RDNA”-based consumer Radeon graphics cards that PC gamers buy? It’s too soon to tell for sure, but we can dig into some of the potential ramifications.

One arch to rule them all

Traditionally, each generation of AMD’s various Radeon graphics offerings revolved around the same underlying architecture. Radeon RX Vega graphics cards, the radical Radeon Pro SSG, and Radeon Instinct server accelerators all used AMD’s Vega GPUs, for example, though the software and warranties available for each differ. By comparison, Nvidia creates different GPU configurations for its data center and consumer graphics products.

Brad Chacos/IDG

AMD used its HBM-enhanced Radeon Vega GPUs in consumer cards, workstation cards, and server accelerators.

Using a single architecture helped AMD compete in several arenas (consumer, workstation, and data center) without having to spend a fortune developing bespoke solutions for each. Creating GPUs is very expensive. Frankly, AMD might not have been able to afford to create multiple concurrent GPU architectures before, as the company was bleeding cash and almost (almost) on its last legs before it rose from the ashes on the back of Ryzen. Cutting the same pie into several pieces was prudent, but it came with both advantages and disadvantages.

On the negative side, reusing the same GPU architecture everywhere meant that AMD’s various Radeon GPU products were often jacks of all trades but masters of none. While Nvidia crafted Tesla GPUs tailored specifically for data center compute, Radeon Instinct cards were saddled with some hardware better suited to rendering games, and Radeon graphics cards carried some internals better suited to compute tasks.

AMD

That’s not all bad, though. Remember how coveted Radeon graphics cards were during the cryptocurrency mining boom a couple of years back? You can thank those data-centric bits. Likewise, AMD’s heavy push toward async compute leveraged those capabilities and helped spark the rise of technologies such as DirectX 12 and Vulkan on the PC. AMD had a leg up on those workloads for half a decade. Nvidia only caught up to AMD’s async compute capabilities with the Turing GPU architecture inside RTX 20-series graphics cards.

The right tool for the right job

That’s changing, though. With RDNA for consumer…

https://www.pcworld.com/article/3531413/amd-cdna-compute-gpus-radeon-rdna-graphics-cards.html#tk.rss_all