A report this week by VRT NWS seemingly outed Google workers for listening to customers' Assistant recordings. Now Google wants you to understand that they were just doing their job.

The Belgian broadcaster got hold of the recordings after Dutch audio data was leaked by a Google employee. VRT says it received more than a thousand Google Assistant excerpts in the file dump, and that it "could clearly hear addresses and other sensitive information." The outlet was then able to match recordings to the people who made them.

It all sounds like a privacy pitfall, but a post by Google wants to assure you that the problem stems from the leak, not the recordings themselves. In a blog post, Google defended the practice as "critical" to the Assistant development process, but acknowledged that there may be issues with its internal security:

“We just learned that one of these language reviewers has violated our data security policies by leaking confidential Dutch audio data. Our Security and Privacy Response teams have been activated on this issue, are investigating, and we will take action. We are conducting a full review of our safeguards in this space to prevent misconduct like this from happening again.”

As Google explains, language experts "only review around 0.2 percent of all audio snippets," which "are not associated with user accounts as part of the review process." The company indicated that these snippets are taken at random and stressed that reviewers "are directed not to transcribe background conversations or other noises, and only to transcribe snippets that are directed to Google."

That's putting a lot of faith in its employees, and it doesn't sound like Google plans on actually changing its practice. Instead, Google pointed users to its new tool that lets you auto-delete your data every three months or 18 months, though it's unclear how that would mitigate larger privacy concerns.

Potential privacy issues

In the recordings it received, VRT said it found several instances where conversations were recorded even though the "Hey Google" prompt wasn't uttered. That, too, raises serious red flags, but Google insists that in those cases the speaker heard a similar phrase that triggered it to activate, calling it a "false accept."

Michael Brown / IDG

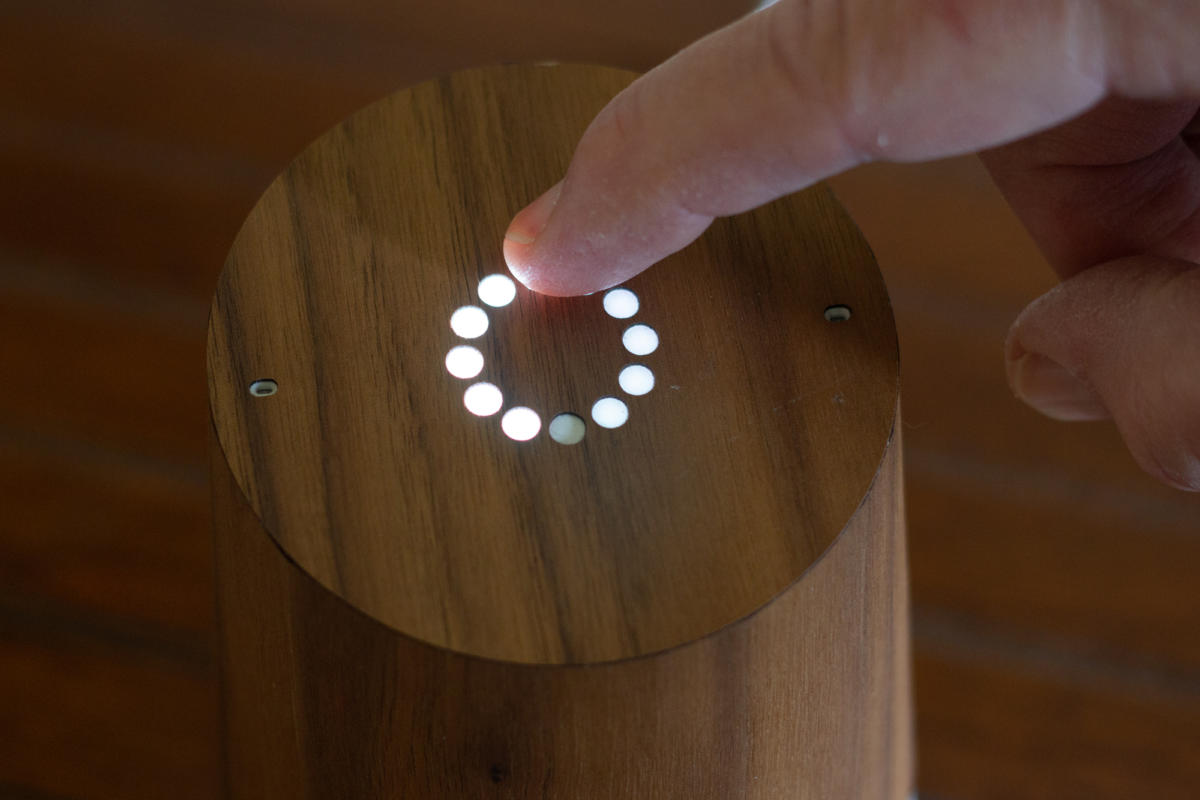

The LED lights on the top of the Google Home let you know it's listening.

While that's certainly a logical explanation, and one that anyone with a smart speaker has experienced, it's not exactly reassuring. Since we now have confirmation that Google employees are randomly listening to recordings, including so-called false accepts, people could be listening to all kinds of things that we don't want them to hear. And while Google says it has "a number of protections in place" to prevent accidental recordings, clearly some cases are still getting through, including, according to VRT, "conversations between parents and their children, but also blazing rows and…

https://www.pcworld.com/article/3409036/google-defends-assistant-recordings-transcription.html#tk.rss_all